Hands On: a real-time adaptive animation interface with haptic feedback

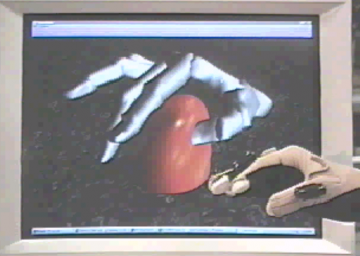

Intuitively controlling the hand of a virtual character or a robot manipulator is difficult. We developed Hands On, a real-time, adaptive animation interface, driven by compliant contact and force information, for animating contact and precision manipulations of virtual objects. […]

Synergies and Modularity in Human Sensorimotor Control

Human motor skills are remarkably complex because they require coordinating many muscles acting on many joints. A widely held, but controversial, hypothesis in neuroscience is that the nervous system simplifies learning and control using a modular architecture, based on modules called synergies. Previous evidence for modularity had been indirect, based on statistical regularities in the […]
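The synergy hypothesis is usually stated as a linear model. Each synergy is a fixed pattern of activation across several muscles, and the nervous system only sets how strongly each pattern is recruited. The sketch below is a hypothetical illustration of that idea, not the model used in this work. The function name `muscle_activations` and the synergy weights are made up.

```python
# Hypothetical illustration of the synergy hypothesis: a few fixed synergy
# vectors (one weight per muscle) are scaled by coefficients and summed to
# give the activation of every muscle.


def muscle_activations(synergies, coefficients):
    """Return per-muscle activations as a weighted sum of synergy vectors.

    synergies:    list of K synergy vectors, each with one weight per muscle
    coefficients: list of K scalars (typically non-negative), one per synergy
    """
    if len(synergies) != len(coefficients):
        raise ValueError("need one coefficient per synergy")
    n_muscles = len(synergies[0])
    activation = [0.0] * n_muscles
    for weights, c in zip(synergies, coefficients):
        for m in range(n_muscles):
            activation[m] += c * weights[m]
    return activation


# Two made-up synergies driving four muscles: the controller chooses
# 2 numbers instead of 4.
SYNERGIES = [
    [1.0, 0.5, 0.0, 0.0],
    [0.0, 0.25, 1.0, 0.5],
]

if __name__ == "__main__":
    print(muscle_activations(SYNERGIES, [0.5, 2.0]))  # [0.5, 0.75, 2.0, 1.0]
```

The point of the example is dimensionality. Only the two coefficients change from moment to moment, while the four muscle activations follow from them.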

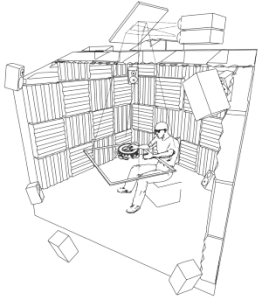

HAVEN: the Haptic Auditory and Visual ENvironment

The HAVEN was a facility for multisensory modeling and simulation, developed at Rutgers University by Prof. Pai to support multisensory human interaction in an immersive virtual environment. The HAVEN was a densely sensed environment. […]

Interaction Capture and Synthesis

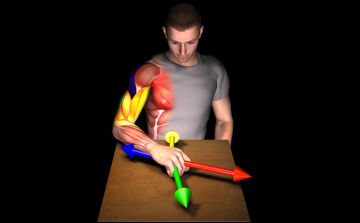

Controlling contact between the hand and physical objects is a major challenge for computer animation, virtual reality, and sensorimotor neuroscience. The compliance with which the hand makes contact also reveals important aspects of the movement’s purpose. We present a […]

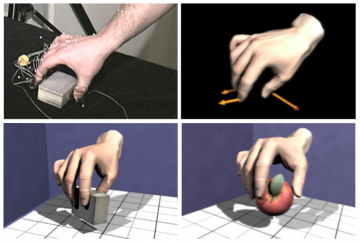

The Tango: a tangible tangoreceptive whole-hand human interface

We invented the Tango, a new passive haptic interface for whole-hand interaction with 3D objects. Several versions were built by Pai and Vanderloo between 1999 and 2001, including a wireless Bluetooth version. The Tango is shaped like a ball and can be grasped comfortably in one hand. Its pressure-sensitive skin measures the contact […]

EigenSkin: Real-time Soft Articulated Body Skinning

We developed a technique, called EigenSkin, for real-time quasi-static deformation of articulated soft bodies such as human and robot hands. The deformations are compactly approximated by data-dependent eigenbases that are optimized for real-time rendering with vertex programs on commodity graphics hardware. Animation results are presented for a very large nonlinear finite element model of […]
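The general idea of a data-dependent eigenbasis can be illustrated with principal component analysis. Collect displacement samples from example poses, find the dominant eigenvector of their covariance, and store one coefficient per pose instead of the full displacement. The sketch below is a simplified, CPU-only illustration in pure Python using power iteration. EigenSkin's actual bases are built and evaluated differently (on graphics hardware, with vertex programs), and all names and data here are hypothetical.

```python
import math
import random


def mean_vector(samples):
    n = len(samples)
    dim = len(samples[0])
    return [sum(s[i] for s in samples) / n for i in range(dim)]


def covariance(samples, mean):
    """Population covariance of the samples around the given mean."""
    dim = len(mean)
    cov = [[0.0] * dim for _ in range(dim)]
    for s in samples:
        d = [s[i] - mean[i] for i in range(dim)]
        for i in range(dim):
            for j in range(dim):
                cov[i][j] += d[i] * d[j]
    n = len(samples)
    return [[v / n for v in row] for row in cov]


def dominant_eigenvector(matrix, iterations=200, seed=0):
    """Power iteration: repeatedly multiply by the matrix and renormalize."""
    rng = random.Random(seed)
    dim = len(matrix)
    v = [rng.random() + 0.1 for _ in range(dim)]
    for _ in range(iterations):
        w = [sum(matrix[i][j] * v[j] for j in range(dim)) for i in range(dim)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:  # all samples identical: nothing to capture
            return v
        v = [x / norm for x in w]
    return v


def compress(samples):
    """Return (mean, unit basis vector, one coefficient per sample)."""
    mean = mean_vector(samples)
    basis = dominant_eigenvector(covariance(samples, mean))
    coeffs = [sum((s[i] - mean[i]) * basis[i] for i in range(len(mean)))
              for s in samples]
    return mean, basis, coeffs


def reconstruct(mean, basis, coeff):
    return [m + coeff * b for m, b in zip(mean, basis)]


# Hypothetical displacement samples that vary along a single direction.
DIRECTION = [1 / 3, 2 / 3, 2 / 3]
SAMPLES = [[0.1 + t * DIRECTION[0], 0.2 + t * DIRECTION[1], 0.3 + t * DIRECTION[2]]
           for t in (-2, -1, 0, 1, 2)]

if __name__ == "__main__":
    mean, basis, coeffs = compress(SAMPLES)
    # Five 3D samples are now one mean, one basis vector and five scalars.
    worst = max(abs(a - s)
                for sample, c in zip(SAMPLES, coeffs)
                for a, s in zip(reconstruct(mean, basis, c), sample))
    print("max reconstruction error:", worst)
```

With real data, more than one basis vector is kept and the reconstruction is approximate. Here the made-up samples lie on a line, so one vector reproduces them to rounding error.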

Real-time Soft Body Dynamics and Contact with GPUs

A long-standing challenge had been the fast simulation of realistic soft-body dynamics, a computationally expensive task. In 2002, when programmable graphics processors (GPUs) had just been introduced, we showed how precomputed modal deformation models could be mapped efficiently onto such massively parallel architectures at negligible cost to the main CPU. The precomputation is stored as a […]
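A precomputed modal model stores a displacement vector over the mesh for each vibration mode. At runtime, the deformed shape is the rest shape plus a weighted sum of those vectors. The sketch below assumes a simple damped-sinusoid response for each mode after an impulse (an assumption, not necessarily the model used here). It separates the cheap per-frame work (K modal amplitudes) from the per-vertex sum that would run in parallel on the GPU. The function names and the `(omega, decay, gain)` mode tuple are hypothetical.

```python
import math


def modal_amplitudes(modes, t):
    """Amplitude q_i(t) of each damped mode after an impulse at t = 0.

    Each mode is an (omega, decay, gain) tuple. This is the only per-frame
    work left on the CPU: K scalars for K modes.
    """
    return [gain * math.exp(-decay * t) * math.sin(omega * t)
            for omega, decay, gain in modes]


def deform_vertex(rest, shapes, q):
    """Displaced position of one vertex: rest + sum_i shapes[i] * q[i]."""
    x, y, z = rest
    for (sx, sy, sz), qi in zip(shapes, q):
        x += sx * qi
        y += sy * qi
        z += sz * qi
    return (x, y, z)


def deform_mesh(rest_positions, mode_shapes, modes, t):
    """mode_shapes[v][i] is the 3D displacement of vertex v in mode i."""
    q = modal_amplitudes(modes, t)
    return [deform_vertex(p, s, q) for p, s in zip(rest_positions, mode_shapes)]


if __name__ == "__main__":
    # Hypothetical two-vertex mesh with two modes.
    rest = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    shapes = [
        [(0.0, 0.1, 0.0), (0.0, 0.0, 0.05)],
        [(0.0, -0.1, 0.0), (0.0, 0.0, 0.05)],
    ]
    modes = [(10.0, 2.0, 1.0), (25.0, 4.0, 0.5)]
    for t in (0.0, 0.05, 0.1):
        print(t, deform_mesh(rest, shapes, modes, t))
```

Each call to `deform_vertex` reads only its own rest position and mode shapes plus the shared amplitudes `q`. The calls are therefore independent, and that independence is what lets the per-vertex loop run as a GPU vertex program.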

ArtDefo: Real Time Elastostatics using Boundary Elements (BEM) and Precomputed Green’s functions

We developed a general framework for low-latency simulation of large linear elastostatic deformable models, suitable for real-time animation and haptic interaction in virtual environments. The deformation is described using precomputed Green’s functions, and runtime boundary value problems are solved using Capacitance Matrix Algorithms. We introduced boundary element (BEM) techniques for precomputing Green’s functions, and […]
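Linearity is what makes precomputation pay off. If `green[i][j]` stores the displacement of node i caused by a unit force at node j, then a runtime contact only requires solving a small system over the touched nodes. The sketch below is a deliberately simplified scalar version, with one degree of freedom per node and a single reference boundary condition. In it, the submatrix of Green's functions for the contact nodes plays the role of the capacitance matrix. The full Capacitance Matrix Algorithm handles more general changes of boundary conditions, and all names and numbers here are hypothetical.

```python
def solve_linear(a, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if m[pivot][col] == 0.0:
            raise ValueError("singular capacitance matrix")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = s / m[r][r]
    return x


def contact_response(green, contact_nodes, prescribed):
    """Displace contact_nodes to the prescribed values; return (u, forces).

    green[i][j]: precomputed displacement of node i per unit force at node j.
    Only a len(contact_nodes) x len(contact_nodes) system is solved at runtime.
    """
    capacitance = [[green[i][j] for j in contact_nodes] for i in contact_nodes]
    forces = solve_linear(capacitance, prescribed)
    displacements = [sum(green[i][j] * f for j, f in zip(contact_nodes, forces))
                     for i in range(len(green))]
    return displacements, forces


if __name__ == "__main__":
    # Hypothetical 3-node Green's function matrix (symmetric, positive definite).
    G = [[2.0, 1.0, 0.0], [1.0, 2.0, 1.0], [0.0, 1.0, 2.0]]
    u, f = contact_response(G, [0], [1.0])
    print("displacements:", u, "contact force:", f)
```

The linear solve involves only the contact nodes, so its cost does not depend on the size of the model. Producing every node's displacement afterwards is a single pass over the precomputed columns.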

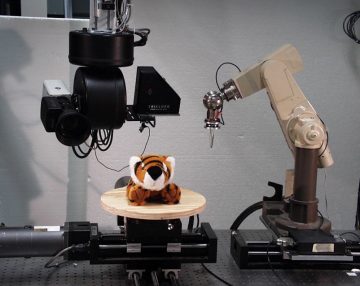

ACME: Scanning Physical Interaction Behavior of 3D Objects

Starting around 1998, we developed the UBC ACtive MEasurement facility (ACME), a telerobotic system for capturing comprehensive computer models of physical interaction behavior of real 3D objects. The behaviors we could successfully scan and model include deformation response, contact textures for interaction with force feedback, and contact sounds. We also developed a novel software architecture for […]